Editor’s note: Text-only Large Language Models (LLMs) process information in a linear, symbolic way. Generative multimodal AI, by contrast, is a big step toward “decision intelligence.” These models build a shared internal representation of the world by processing different kinds of data at once: text, images, sound, video, and sensor signals. As a result, they let you put sensing technology to full use in your core business domains. This article covers the most important features, use cases, risks, and best practices of generative multimodal AI for five key industries: Fintech & Banking, Logistics & Public Sector, Smart Home IoT, Travel Tech, and Healthcare & Medtech.

High-Utility Generative Multimodal AI Across Verticals

In 2026, the era of the “General Purpose” model gives way to the era of “Vertical Mastery.” Foundational models come with a built-in reasoning engine, but they lack the domain data you need to make critical decisions in your field. High-utility generative multimodal AI is about refining and narrowing these powerful engines so they excel at specific tasks. For example, a general model knows what a traffic light is, but a high-utility smart city agent knows how traffic moves at 5 PM on a rainy Tuesday and can adjust signal timing in real time using live video feeds and sensor data.

Companies hold “dark data” they have not yet monetized, and training models on these proprietary, multimodal datasets is what makes vertical mastery possible. Examples include decades of medical imaging in healthcare, terabytes of seismic data in energy, and thousands of hours of customer service calls in banking. The shift to high-utility AI is also about trust and dependability. Trust is what makes the new AI economy work: users and stakeholders will only hand authority to AI if it consistently handles challenging situations. High-utility multimodal models build this trust by explaining why they made their choices.

In this landscape, Trustify Technology’s AI engineering team helps clients build a competitive moat that generic competitors can’t cross. We do this by designing applications that draw on these rich, multi-sensory resources.

Regulated Precision: Agentic Compliance in Fintech & Medtech

“Zero Trust” is a foundational principle of modern cybersecurity, and multimodal AI is the key to applying it in Fintech and Medtech. In a world filled with deepfakes and synthetic identities, text-based passwords and simple two-factor authentication (2FA) are no longer enough. “Regulated Precision” requires a “Zero-Trust” approach to identity verification that relies on intrinsic, multimodal biometric signals.

Trustify Technology achieves “regulated precision” by building forensic detection tools directly into its AI architecture. We harden our models against manipulation by exposing them to deepfakes and synthetic data during training, a technique called “adversarial training.” We demonstrate that our AI agents can distinguish a real person from a digital fake more accurately than a human reviewer. This gives clients the regulatory assurance they need to adopt these technologies. The result is a safe, compliant environment where new ideas can grow without breaking the strict rules of the medical and financial fields.

Resilient Operations: Autonomous Supply Chains & Edge IoT

The “Dark Warehouse” epitomizes resilient operations. It is a fully automated facility where AI controls most of the flow of goods without human intervention. In practice, this means robots running multimodal vision models that can spot damaged boxes, read faded labels, and navigate around spilled liquids without stopping the line. This level of perception is only possible with multimodal AI.

At Trustify Technology, we build these systems around the principle of autonomous orchestration. We create agents that act as the facility’s brain. They watch video feeds to spot workflow bottlenecks, listen to conveyor-belt audio to detect worn-out parts (predictive maintenance), and process inventory logs to optimize slotting. For instance, when the AI “sees” a backlog at the packing station, it automatically dispatches autonomous mobile robots (AMRs) to help, keeping the load balanced in real time.
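
The backlog-to-dispatch step can be made concrete with a minimal Python sketch. It assumes a vision model already reports how many items are queued at each station and how fast each station clears them. The `StationReading` type, the 10-minute backlog target, and the per-AMR throughput are hypothetical values for illustration, not a production dispatch policy.

```python
import math
from dataclasses import dataclass


@dataclass
class StationReading:
    station: str
    queued_items: int           # items counted in the camera feed (assumed vision-model output)
    throughput_per_min: float   # items the station currently clears per minute


def minutes_of_backlog(r: StationReading) -> float:
    """Time needed to clear the current queue at the observed throughput."""
    if r.queued_items == 0:
        return 0.0
    if r.throughput_per_min <= 0:
        return math.inf
    return r.queued_items / r.throughput_per_min


def plan_amr_dispatch(readings, idle_amrs, max_backlog_min=10.0, items_per_amr_per_min=2.0):
    """Assign idle AMRs to the most backlogged stations first (hypothetical policy)."""
    plan = {}
    available = idle_amrs
    for r in sorted(readings, key=minutes_of_backlog, reverse=True):
        if available == 0:
            break
        if minutes_of_backlog(r) <= max_backlog_min:
            continue  # normal busy period: no intervention needed
        # Extra throughput required to clear the queue within the target time.
        needed_rate = r.queued_items / max_backlog_min - r.throughput_per_min
        amrs = min(available, max(1, math.ceil(needed_rate / items_per_amr_per_min)))
        plan[r.station] = amrs
        available -= amrs
    return plan


readings = [StationReading("pack-1", 120, 6.0), StationReading("pack-2", 30, 6.0)]
print(plan_amr_dispatch(readings, idle_amrs=5))
```

In this example, `pack-1` has a 20-minute backlog and receives three AMRs. `pack-2` has a 5-minute backlog, which the policy treats as a normal busy period, so it gets none.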

This level of autonomy makes the supply chain “anti-fragile,” meaning it gets stronger under stress. During peak periods, the system becomes more efficient instead of breaking down. Because it processes multimodal inputs, the system understands operational context and can tell a normal busy period from a critical bottleneck. This enables nuanced decisions that used to require years of warehouse-management experience.

Anticipatory Service: Hyper-Personalization in Travel Tech

Picture a traveler posting a photo of a sunset in Santorini and saying, “Find me a place that feels like this, but in the Caribbean, for less than $5,000.” A text-only model would struggle with this request. A high-utility multimodal model analyzes the image’s aesthetics (whitewashed walls, blue domes, sea view), applies the budget and location constraints (text), and then searches the global inventory to build a custom itinerary.
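
The decomposition can be sketched in Python. The sketch assumes an image encoder (for example, a CLIP-style model) has already turned the uploaded photo and each listing’s photos into embedding vectors. The `Listing` type, the toy three-dimensional vectors, and the sample inventory are invented for illustration. The text constraints act as hard filters first, and visual similarity then ranks the remaining listings.

```python
import math
from dataclasses import dataclass


@dataclass
class Listing:
    name: str
    region: str
    price_usd: float
    style_embedding: list  # assumed output of an image encoder


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(photo_embedding, region, max_price, inventory, top_k=3):
    # Text constraints ("Caribbean", "less than $5,000") act as hard filters...
    candidates = [item for item in inventory
                  if item.region == region and item.price_usd < max_price]
    # ...and visual similarity to the uploaded photo ranks what remains.
    candidates.sort(key=lambda item: cosine(photo_embedding, item.style_embedding),
                    reverse=True)
    return [item.name for item in candidates[:top_k]]


inventory = [
    Listing("Whitewash Villa", "Caribbean", 4200, [0.9, 0.1, 0.2]),
    Listing("Jungle Lodge", "Caribbean", 3000, [0.0, 1.0, 0.0]),
    Listing("Cliffside Suites", "Caribbean", 6500, [1.0, 0.0, 0.2]),
    Listing("Aegean Suite", "Greece", 2000, [1.0, 0.0, 0.2]),
]
santorini_photo = [1.0, 0.0, 0.2]
print(search(santorini_photo, "Caribbean", 5000, inventory))
```

“Cliffside Suites” is the closest visual match, but it is excluded because it breaks the budget. Filtering before ranking keeps the text constraints strict.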

The travel industry is undergoing a paradigm shift from “Search” to “Service.” For decades, travelers have had to act as their own project managers, piecing together flights, hotels, and activities. The future belongs to the “Agentic Concierge,” which is an AI that not only answers questions but also acts as a direct assistant to plan, book, and manage the entire journey. This concierge is powered by multimodal AI, which allows it to understand the traveler’s intent through natural conversation (voice/text) and visual inspiration.

This “hyper-personalization” is anticipatory: it doesn’t wait for the user to ask. The agent combines structured data about the user’s past trips with their current situation (location, weather) to offer services proactively. The travel app shifts from being merely a tool to being a companion. This strengthens customer loyalty and increases spending by creating personalized “moments of magic.”

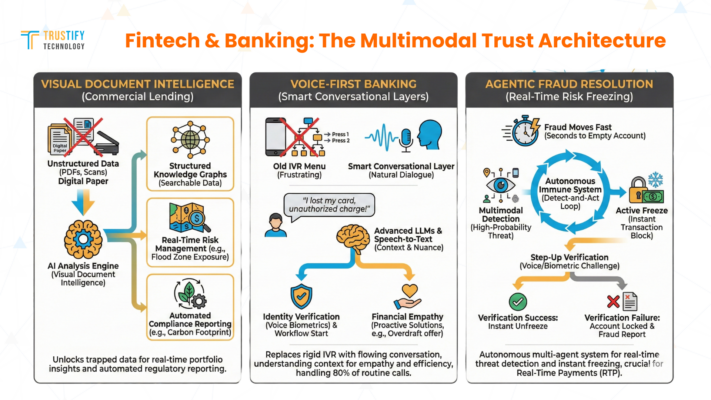

Fintech & Banking: The Multimodal Trust Architecture

In Fintech and Banking, trust is paramount, but the ways to build it are changing fast. For a long time, security relied on passwords, PINs, and single-factor authentication. These are no longer enough against the growing threat of deepfakes and synthetic identities. The “Multimodal Trust Architecture” is the next big step in Fintech. It verifies identity using multiple signals beyond a single secret, such as behavioral and biometric cues. Deloitte’s study of agentic AI in banking shows that adding autonomous agents to these processes is not just an improvement; it is necessary to deal with the complexity of modern financial crime.

Our Trustify Technology AI engineering team can help your financial business reach this new level of trust with multimodal AI agents that act as “Continuous Authenticators.” Unlike a traditional one-time login check, these agents verify the user’s session in real time. They analyze keystroke cadence (behavioral), stress in a voice command (audio), and the device’s geolocation context (sensor data). This lets banks move from “binary” security (access granted or denied) to “risk-adaptive” security. If a user logs in from a new location but their voice print and navigation patterns closely match their historical profile, the agent lets them in without friction. If the patterns don’t match, the agent escalates to a biometric challenge. This is “High-Utility” AI at work: it makes banking both safer and easier, strengthening security while removing the need for passwords.
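
The risk-adaptive decision can be sketched as a weighted fusion of per-signal match scores. Everything here is an assumption for illustration. Each match score is assumed to come from a separate behavioral, voice, or location model. The signal names, weights, and thresholds are hypothetical; a real system would learn and calibrate them on real data.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "biometric_challenge"
    DENY = "deny"


# Hypothetical weights for each signal; they sum to 1.0 so risk stays in [0, 1].
WEIGHTS = {"keystroke": 0.3, "voice": 0.4, "geo": 0.3}


def risk_score(match_scores):
    """match_scores maps signal name -> similarity to the user's profile (0.0 to 1.0).
    A missing signal is treated as a full mismatch, which errs on the side of caution."""
    return sum(w * (1.0 - match_scores.get(signal, 0.0)) for signal, w in WEIGHTS.items())


def decide(match_scores, allow_below=0.2, deny_above=0.7):
    risk = risk_score(match_scores)
    if risk < allow_below:
        return Decision.ALLOW
    if risk > deny_above:
        return Decision.DENY
    return Decision.STEP_UP


# New location, but typing rhythm and voice match the stored profile closely.
print(decide({"keystroke": 0.95, "voice": 0.97, "geo": 0.5}))
```

The middle band between the two thresholds is what turns binary security into risk-adaptive security. Moderately unusual sessions get a biometric challenge rather than an outright block.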

Visual Document Intelligence for Commercial Lending

A bank’s most valuable asset is its data, but for commercial lenders most of that data is locked in “digital paper”: PDFs and scanned images. The bank’s analytics engines can’t read this unstructured data. “Visual Document Intelligence” unlocks it by turning unstructured documents into structured, searchable knowledge graphs.

Imagine a bank that wants to know how much of its retail-sector exposure sits in a particular flood zone. In the past, this meant reviewing thousands of property appraisals by hand. With Visual Document Intelligence, an agent can quickly “read” every appraisal and extract each property’s location and value. It then compares them against a flood map and produces a risk report. This “portfolio intelligence” lets banks manage risk in real time instead of waiting for quarterly reports to see how the market is changing.
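
Once appraisals have been extracted into structured records, the portfolio query itself is simple. The sketch assumes the document-intelligence step has already produced `Appraisal` records. The record fields, the zone identifier, and the rectangular zone boundary are hypothetical simplifications. A real flood map would be a set of polygons queried with a GIS library.

```python
from dataclasses import dataclass


@dataclass
class Appraisal:
    # Fields an extraction agent is assumed to pull from each scanned appraisal.
    loan_id: str
    sector: str
    lat: float
    lon: float
    appraised_value: float


# Hypothetical flood zone as a (min_lat, min_lon, max_lat, max_lon) box.
FLOOD_ZONES = {"ZONE-A": (29.70, -95.45, 29.80, -95.30)}


def in_box(a, box):
    min_lat, min_lon, max_lat, max_lon = box
    return min_lat <= a.lat <= max_lat and min_lon <= a.lon <= max_lon


def flood_exposure(appraisals, sector, zone_id):
    """Aggregate appraised value for one sector inside one flood zone."""
    box = FLOOD_ZONES[zone_id]
    hits = [a for a in appraisals if a.sector == sector and in_box(a, box)]
    return {"loans": [a.loan_id for a in hits],
            "total_value": sum(a.appraised_value for a in hits)}


appraisals = [
    Appraisal("L-001", "retail", 29.75, -95.40, 1_200_000),
    Appraisal("L-002", "retail", 29.90, -95.40, 800_000),
    Appraisal("L-003", "office", 29.75, -95.35, 2_000_000),
]
print(flood_exposure(appraisals, "retail", "ZONE-A"))
```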

This capability also simplifies regulatory reporting. New rules may require banks to disclose the carbon footprint of their loan book. When they do, multimodal agents can automatically estimate the portfolio’s emissions from the environmental reports and utility bills that borrowers submit. Compliance shifts from a daunting manual task to a straightforward, automated process.

Voice-First Banking: The End of the IVR Menu

“Press 1 for balances, press 2 for transfers” is the hallmark of the old-fashioned Interactive Voice Response (IVR) menu. Customers who just want to fix a problem find it very frustrating. Deloitte’s research on AI in banking points to a move toward smart, conversational layers that sit on top of legacy systems. “Voice-First Banking” replaces the rigid menu tree with a natural, flowing conversation. A customer calls and says, “Someone used my card at a gas station after I lost it.” The AI understands the intent (report a lost card and dispute a transaction), verifies the caller’s identity with voice biometrics, and starts the workflow right away.
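
Downstream of speech recognition, the routing step can be sketched as follows. The sketch assumes an upstream language model has already labeled the caller’s utterance with one or more intents. It also assumes a voice-biometric model returns a match score. The intent labels, workflow names, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from intent labels to back-office workflows.
WORKFLOWS = {
    "report_lost_card": "block_card_and_reissue",
    "dispute_transaction": "open_chargeback_case",
    "check_balance": "read_balance",
}


@dataclass
class CallResult:
    verified: bool
    started: list = field(default_factory=list)
    handoff: bool = False   # True when a human agent should take over


def handle_call(intents, voice_match, threshold=0.9):
    """intents: labels extracted from one utterance; voice_match: biometric score in [0, 1]."""
    if voice_match < threshold:
        # Identity not confirmed: do not act, route to a person instead.
        return CallResult(verified=False, handoff=True)
    result = CallResult(verified=True)
    for intent in intents:
        workflow = WORKFLOWS.get(intent)
        if workflow is None:
            result.handoff = True          # unrecognized request -> escalate
        else:
            result.started.append(workflow)
    return result


r = handle_call(["report_lost_card", "dispute_transaction"], voice_match=0.96)
print(r.started)
```

A single utterance can start several workflows at once. That is the practical difference from a menu tree, which forces one branch per call.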

These “conversational cores” combine advanced speech-to-text with large language models (LLMs) tuned to banking terminology. Unlike older chatbots that only matched keywords, these agents understand context and nuance. Suppose a customer says, “I’m a little short for rent this month.” The agent can infer a need for cash and offer a temporary overdraft increase or the option to skip a payment. The interaction shifts from “transaction processing” to “financial empathy,” which both reassures the customer and resolves their need.

This shift delivers significant savings by deflecting calls from human agents. According to Google Cloud’s ROI report, high-value AI agents can double productivity. The voice-first system handles 80% of routine questions on its own. This frees human staff to bring empathy to the more difficult, emotional cases, such as fraud recovery or bereavement. The contact center turns from a cost center into a customer-retention engine.

Agentic Fraud Resolution: Freezing Risks in Real-Time

Fraud moves very quickly: a compromised credential can empty an account in seconds. Machine learning can flag an event as fraud almost instantly, but resolving it often still waits on a human analyst or a customer confirmation text. That delay is where the money goes missing. Deloitte’s report “Agentic AI in Banking” describes a future where multi-agent systems investigate and resolve AML alerts on their own. “Agentic Fraud Resolution” closes this time gap by giving the AI the authority to act. It works like an “autonomous immune system” that stops threats immediately.

Our AI engineering team at Trustify Technology builds these systems around a “detect-and-act” loop. A multimodal model may detect a high-probability account takeover, based on location, device fingerprint, and behavioral biometrics. When that happens, the agent doesn’t just send an alert. It actively “freezes” the rails for outgoing transactions. The agent then starts a “Step-Up” verification workflow, either calling the customer (Voice AI) or sending a biometric challenge to their trusted device. If verification succeeds, it unfreezes the funds instantly. If it fails, it locks the account and drafts the fraud report.
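
The detect-and-act loop is essentially a small state machine. This Python sketch assumes a fraud model supplies a takeover probability. It also assumes the step-up challenge (voice call or biometric prompt) reports back a pass/fail result; delivering the challenge itself is out of scope. The 0.85 threshold, the state names, and the report placeholder are hypothetical.

```python
from enum import Enum


class AccountState(Enum):
    ACTIVE = "active"
    FROZEN = "frozen"   # outgoing payments held pending verification
    LOCKED = "locked"   # verification failed; fraud case opened


class FraudAgent:
    def __init__(self, takeover_threshold=0.85):
        self.threshold = takeover_threshold
        self.state = AccountState.ACTIVE
        self.reports = []

    def on_event(self, takeover_probability: float) -> AccountState:
        # Detect: only high-probability takeovers trigger action.
        if self.state is AccountState.ACTIVE and takeover_probability >= self.threshold:
            self.state = AccountState.FROZEN   # Act: hold outgoing rails immediately
        return self.state

    def on_step_up_result(self, verified: bool) -> AccountState:
        if self.state is not AccountState.FROZEN:
            return self.state                  # nothing pending
        if verified:
            self.state = AccountState.ACTIVE   # release funds instantly
        else:
            self.state = AccountState.LOCKED
            self.reports.append("fraud report drafted")  # placeholder for case creation
        return self.state


agent = FraudAgent()
agent.on_event(0.93)                      # high-probability takeover -> funds frozen
print(agent.on_step_up_result(verified=False), agent.reports)
```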

On “Real-Time Payments” (RTP) networks, transactions are irrevocable, so the ability to act autonomously is crucial. A payment sent in error cannot be pulled back, which means the defense has to be as fast as the payment itself. Agents that decide in milliseconds whether to block or release funds let banks join the RTP revolution without risking huge fraud losses. The system builds in the “safety brakes” needed to operate safely at this speed.

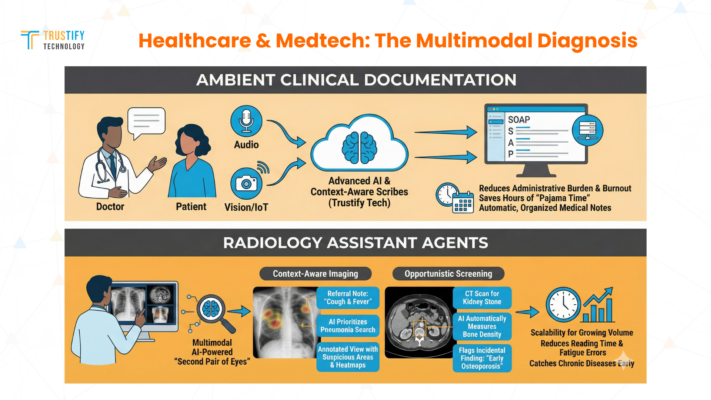

Healthcare & Medtech: The Multimodal Diagnosis

For too long, the keyboard and screen have stood between healthcare workers and their patients, forcing doctors to turn away from the patient to feed the machine. High-Utility Generative Multimodal AI restores the human connection by acting as an “intelligent interface” that runs in the background.

The ability to handle unstructured inputs such as voice notes and images with ease has become especially valuable in healthcare. It means deploying agents that can “see” and “hear” the clinical encounter, which streamlines data entry and lets doctors focus on healing.

Multimodal agents can listen to the conversation and distinguish a symptom description from casual chat. They observe the physical exam through secure vision sensors (where permitted) and record changes in skin color or range of motion. This information is then assembled into a structured clinical note that needs only a quick review and a signature. Wolters Kluwer says this shift is important for helping nurses avoid burnout and develop new skills. By automating low-value administrative tasks, this approach frees clinicians for the complex decision-making and empathetic communication that no AI can replace.

Ambient Clinical Documentation

The most frequent complaint from both patients and physicians today is the loss of eye contact. The Electronic Health Record (EHR), designed to improve care, has inadvertently turned doctors into data entry clerks. Wolters Kluwer’s 2026 Healthcare AI Trends identifies this “administrative burden” as a primary driver of clinician burnout. “Ambient Clinical Documentation” is the technological antidote. It uses advanced AI to listen to the natural conversation between the doctor and patient (audio) and see the clinical context (vision/IoT), automatically turning this informal interaction into a clear, organized medical note.

Our Trustify Technology AI team helps your Healthcare/Medtech operational teams build these systems as “context-aware scribes.” Simple dictation software needs specific commands, whereas an ambient agent understands how a medical interview should flow. It knows when the doctor is asking about the patient’s history (“When did the pain start?”) and when they are giving instructions (“Take the medicine twice a day”). It also filters out small talk about the weather.

Multimodal AI fuses these different signals into a single understanding. The result is a draft note in SOAP (Subjective, Objective, Assessment, Plan) format that appears in the EHR a few minutes after the patient leaves. This saves doctors hours of “pajama time,” the late-night paperwork that pushes them to their limits, and gives them back their evenings and their mental health.
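
Assembling the draft note can be sketched as a bucketing step. The sketch assumes an upstream speech and language model has already split the encounter into segments and labeled each one. The label names and their mapping to SOAP sections are hypothetical. In this sketch, unrecognized labels are simply dropped; a real scribe would flag them for clinician review.

```python
from collections import defaultdict

# Hypothetical labels from an upstream model, mapped to SOAP sections.
SECTION_FOR_LABEL = {
    "history": "Subjective",        # patient-reported symptoms and history
    "exam_finding": "Objective",    # observations, vitals, exam findings
    "assessment": "Assessment",
    "instruction": "Plan",
    "small_talk": None,             # discarded
}
SOAP_ORDER = ["Subjective", "Objective", "Assessment", "Plan"]


def draft_soap(segments):
    """segments: list of (label, text) tuples in conversation order."""
    note = defaultdict(list)
    for label, text in segments:
        section = SECTION_FOR_LABEL.get(label)
        if section:
            note[section].append(text)
    # Emit only the sections that received content, in SOAP order.
    return "\n".join(f"{s}: {' '.join(note[s])}" for s in SOAP_ORDER if note[s])


segments = [
    ("small_talk", "Nice weather today."),
    ("history", "Pain started three days ago."),
    ("exam_finding", "Mild swelling of the left ankle."),
    ("instruction", "Take the medicine twice a day."),
]
print(draft_soap(segments))
```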

Radiology Assistant Agents

Radiology faces a volume problem: the number of medical images grows quickly every year, while the number of radiologists stays roughly flat. Radiology Assistant Agents powered by multimodal AI address this scalability problem. These agents don’t replace the radiologist. Instead, they act as a tireless “Second Pair of Eyes,” pre-reading scans to prioritize the most urgent cases and point out subtle abnormalities.

For example, when reviewing a chest X-ray, the agent doesn’t just look for “any” problem; it prioritizes the problems suggested by the referral. If the clinical note says “cough and fever,” the agent looks for consolidation from pneumonia first. If the note says “weight loss,” it looks for nodules or masses. This “context-aware imaging” makes the reading much more sensitive. The AI gives the radiologist an “Annotated View” with bounding boxes around suspicious areas, heatmap overlays for density, and direct links to the patient’s history. This lets the human expert focus immediately on the pathology, reducing both reading time per case and fatigue-related errors.
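
Here is a minimal sketch of referral-driven prioritization. A simple phrase lookup stands in for the language model that would actually read the referral. The phrase-to-finding table and the default checklist are hypothetical illustrations, not clinical guidance.

```python
# Hypothetical mapping from referral-note phrases to findings checked first.
REFERRAL_FOCUS = {
    "cough": ["consolidation"],
    "fever": ["consolidation"],
    "weight loss": ["nodule", "mass"],
}
DEFAULT_CHECKS = ["consolidation", "nodule", "mass", "effusion", "pneumothorax"]


def check_order(referral_note: str) -> list:
    """Return the order in which findings should be examined for this scan."""
    note = referral_note.lower()
    focus = []
    for phrase, findings in REFERRAL_FOCUS.items():
        if phrase in note:
            for finding in findings:
                if finding not in focus:
                    focus.append(finding)
    # Referral-driven findings first, then the rest of the standard checklist.
    return focus + [f for f in DEFAULT_CHECKS if f not in focus]


print(check_order("Unexplained weight loss over 3 months"))
```

The full checklist is still covered. Only the order changes, so context raises sensitivity for the suspected problem without skipping anything.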

This technology is especially important for “opportunistic screening.” An abdominal CT ordered for a kidney stone may also show the lower spine. A radiologist concentrating on the kidney may overlook early signs of osteoporosis in the spine. A multimodal agent that actively searches for incidental findings can automatically measure bone density and flag the result, for example: “Incidental finding: Low bone density suggesting osteoporosis.” Every scan becomes a broader health check, catching chronic diseases early, when they can still be treated.
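
One way to picture the bone-density flag: research on opportunistic CT screening measures the attenuation of trabecular bone in a vertebral body, in Hounsfield units (HU), and flags low values. The sketch assumes a vision model has already segmented that region of interest. The 110 HU cut-off is an illustrative parameter, not a clinical recommendation.

```python
from statistics import mean


def screen_bone_density(roi_hu_values, threshold_hu=110.0):
    """roi_hu_values: CT attenuation samples (HU) from a trabecular region of interest,
    assumed to come from an upstream segmentation model.
    Returns an incidental-finding string, or None if nothing is flagged."""
    if not roi_hu_values:
        return None                 # no usable region of interest on this scan
    avg = mean(roi_hu_values)
    if avg < threshold_hu:
        return f"Incidental finding: Low bone density suggesting osteoporosis (mean {avg:.0f} HU)."
    return None


print(screen_bone_density([95, 100, 105]))
```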

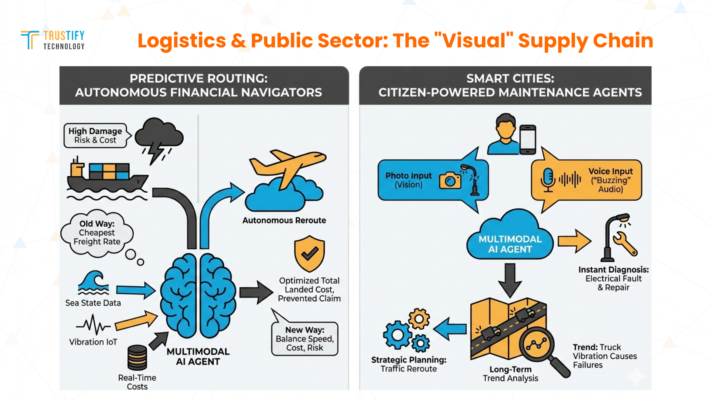

Logistics & Public Sector: The “Visual” Supply Chain

In the complex world of global trade, the “Visual Supply Chain” marks the shift from human-supervised to agent-run operations. The traditional supply chain is fragmented and relies on disconnected email updates, PDFs, and dashboards. Real work also produces screenshots of errors, log extracts, and voice notes from field technicians. These are all forms of unstructured data that legacy systems can’t handle. High-utility multimodal AI combines these different signals into a clear operational picture.

When you partner with Trustify Technology, our AI experts help your business team build systems in which “Digital Employees” (AI agents) continuously scan this visual and textual data. They confirm that the physical flow of goods matches the digital record. This alignment is crucial for “Resilient Operations.” IDC’s “FutureScape” report predicts that agentic AI will become more capable and will drive more stress-resilient infrastructure. In logistics, this means deploying agents that can monitor many assets at once.

Picture a fleet of drones flying through a warehouse. The multimodal agent doesn’t just count boxes. It also spots crushed packaging (vision), reads labels to check expiration dates (text), and listens to the hum of the conveyor belt to predict when it will break down (audio). This “sensory” oversight makes “dark warehousing” possible: fully automated facilities where AI runs the movement of goods with little human help.
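
In its simplest form, the “listening” part of that oversight detects when a conveyor’s sound departs from its healthy baseline. This sketch uses frame loudness (root-mean-square amplitude) and a z-score against earlier baseline frames. The baseline length of 20 frames and the 3.0 threshold are assumptions. Real predictive-maintenance models typically use richer spectral features.

```python
import math
from statistics import mean, stdev


def rms(frame):
    """Root-mean-square loudness of one audio frame (a list of samples)."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def anomalous_frames(frames, baseline_size=20, z_threshold=3.0):
    """Return indices of frames whose loudness deviates strongly from the
    baseline formed by the first `baseline_size` frames (needs >= 2 baseline frames)."""
    levels = [rms(f) for f in frames]
    if len(levels) <= baseline_size:
        return []
    baseline = levels[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    flagged = []
    for i, level in enumerate(levels[baseline_size:], start=baseline_size):
        z = (level - mu) / sigma if sigma else 0.0
        if abs(z) > z_threshold:
            flagged.append(i)
    return flagged


healthy = [[(0.10, 0.11, 0.09)[i % 3]] * 50 for i in range(25)]
grinding = [[0.5] * 50]   # a sudden loud frame, e.g. a bearing starting to grind
print(anomalous_frames(healthy + grinding))
```

Only the final, loud frame is flagged. The normal small variation in the healthy frames stays well inside the threshold.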

Predictive Routing with Multimodal Inputs

In logistics, the cheapest route is often the most expensive one if it causes a factory shutdown. Predictive routing with multimodal inputs lets businesses optimize for “total landed cost” instead of freight rates alone. Maersk’s discussion of decision intelligence shows how important it is to understand costs and risks along the entire route.

Multimodal agents serve as “financial navigators.” These agents continuously evaluate the trade-offs between speed, cost, and risk by analyzing diverse data sources that a human dispatcher could never synthesize in real time.

DHL Global stresses that AI will take over these “autonomous decision-making” tasks. For example, an agent that predicts rough seas might book an air transfer ahead of time, preventing a million-dollar insurance claim for damaged goods. The decision rests on specific multimodal evidence: “Vibration threshold predicted to exceed safety limits due to sea state.”
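
The trade-off can be written as an expected total landed cost. In this sketch, the damage probability is the quantity the multimodal agent is assumed to predict, for example from the vibration forecast. The cost components, the figures, and the `RouteOption` type are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RouteOption:
    mode: str
    freight_cost: float
    transit_days: float
    p_damage: float   # predicted probability the cargo is damaged en route


def expected_landed_cost(opt, cargo_value, daily_holding_cost,
                         downtime_cost=0.0, deadline_days=None):
    cost = opt.freight_cost
    cost += opt.transit_days * daily_holding_cost   # inventory carrying cost in transit
    cost += opt.p_damage * cargo_value              # expected loss from damage
    if deadline_days is not None and opt.transit_days > deadline_days:
        cost += downtime_cost                       # late arrival halts the factory
    return cost


def choose(options, **kwargs):
    """Pick the option with the lowest expected total landed cost."""
    return min(options, key=lambda o: expected_landed_cost(o, **kwargs))


sea = RouteOption("sea", freight_cost=20_000, transit_days=30, p_damage=0.40)
air = RouteOption("air", freight_cost=150_000, transit_days=3, p_damage=0.01)
print(choose([sea, air], cargo_value=1_000_000, daily_holding_cost=500).mode)
```

With a 40% predicted damage probability on $1M of cargo, the sea option’s expected cost is about $435,000. The air option’s is about $161,500, even though air freight costs far more. If the damage risk is low, sea freight wins unless a production deadline makes the slower route too costly.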

Smart Cities: Infrastructure Maintenance Agents

A city’s best sensors are its residents, but the channels for reporting problems are often slow and bureaucratic. IDC predicts that half of all local governments will invest in improving LLMs to get more value from their data.

For example, “Multimodal Citizen Kiosks” and apps can act as “Maintenance Agents,” letting people report infrastructure problems in a natural, sensory way. Instead of filling out a form, a citizen takes a photo of a broken streetlight or leaves a voice message: “The light on 5th and Main is flickering and making a buzzing sound.” The multimodal agent processes these inputs immediately. It uses vision analysis to identify the streetlight type and the damage. It also analyzes the audio to detect the buzzing, which points to an electrical fault.

The combination of this multimodal data also lets city planners see long-term trends. The agent might notice that streetlights in a certain area break down more often because of the vibrations from heavy trucks (based on audio and sensor data). This could lead to a review of traffic routing.
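
Spotting such a trend starts with comparing failure rates across districts. This sketch flags districts whose streetlight failure rate is well above the citywide rate. Correlating those hotspots with truck-vibration audio or sensor data would be a further step. The district names, counts, and the 2x ratio are hypothetical.

```python
from collections import Counter


def failure_hotspots(failures, lights_per_district, ratio=2.0):
    """failures: one district name per reported streetlight failure.
    Returns {district: rate relative to the citywide rate} for districts whose
    failure rate is at least `ratio` times the citywide rate."""
    counts = Counter(failures)
    city_rate = len(failures) / sum(lights_per_district.values())
    hotspots = {}
    for district, n_lights in lights_per_district.items():
        rate = counts[district] / n_lights
        if rate >= ratio * city_rate:
            hotspots[district] = round(rate / city_rate, 2)
    return hotspots


lights = {"Harbor": 200, "Midtown": 400, "Uptown": 400}
failures = ["Harbor"] * 12 + ["Midtown"] * 4 + ["Uptown"] * 4
print(failure_hotspots(failures, lights))
```

Here the Harbor district fails at three times the citywide rate. That is the kind of pattern that would prompt a closer look at heavy-truck traffic in the area.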

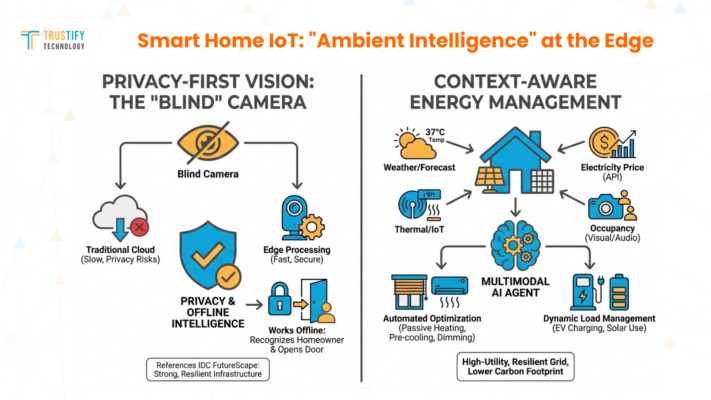

Smart Home IoT: “Ambient Intelligence” at the Edge

We’re now entering the age of the “Self-Healing Home,” where appliances know when they’re about to break and arrange their own repairs. This is consumer-grade “predictive maintenance,” and it runs on multimodal AI. Multimodal models can already use audio notes and log extracts to automate support cases; we apply the same logic on the machine itself.

McKinsey’s definition of multimodal AI highlights the ability to process several inputs to form a nuanced perception. Here, that perception is “Machine Health.” An agent that detects an abnormal sound from a failing motor can even send a clip to the manufacturer’s cloud for confirmation, making sure the technician brings the right part. This eliminates the separate “diagnosis visit,” saving both the customer and the service provider time and money.

This “agentic” ability changes the nature of ownership. Bain & Company’s work on “Agentic Commerce” shows that people are increasingly willing to let AI act on their behalf. By automating the maintenance lifecycle, these agents help appliance makers shift from selling a “product” to selling “uptime.” Consider a refrigerator that orders its own water filter, or an HVAC system that schedules its own tune-up based on actual usage rather than the calendar. These are the best examples of “Ambient Intelligence.” The technology works seamlessly and often goes unnoticed until it becomes a lifesaver.
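
Usage-based scheduling, as opposed to calendar-based scheduling, can be sketched as follows. The 1,500-hour service interval, the 365-day cap, and the `HvacUsage` fields are assumptions for illustration, not manufacturer guidance.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class HvacUsage:
    runtime_hours_since_service: float
    avg_daily_runtime_hours: float   # e.g. from the unit's own operating logs


def next_tune_up(today, usage, service_interval_hours=1500.0, max_days=365):
    """Schedule service when accumulated runtime is projected to reach the interval,
    capped at max_days so lightly used systems are still checked."""
    remaining = max(0.0, service_interval_hours - usage.runtime_hours_since_service)
    if usage.avg_daily_runtime_hours > 0:
        days = int(remaining // usage.avg_daily_runtime_hours)
    else:
        days = max_days
    return today + timedelta(days=min(days, max_days))


print(next_tune_up(date(2026, 1, 1), HvacUsage(1200, 10)))
```

A heavily used system with 300 hours left at 10 hours a day is booked 30 days out. A rarely used one falls back to the annual cap.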

Privacy-First Vision: The “Blind” Camera

Traditional IoT devices send large volumes of raw data to the cloud for processing, which adds latency and creates privacy risks. “Privacy-First Vision” keeps the data close to home: the camera analyzes images on the device, so raw footage does not need to leave it. Handling multiple input types, such as images and logs, requires a robust architecture, and processing them at the edge is the most efficient approach.

Local processing also enables “offline intelligence.” A smart lock should keep working even if the internet is down. We host the multimodal agent on the device so that the “Blind Camera” keeps working during network outages: it can still recognize the homeowner and unlock the door. IDC’s “FutureScape” report names agentic AI as the driving force behind resilient infrastructure. In the home, this means safety systems keep working even when the internet goes down.

Context-Aware Energy Management

Traditionally, energy management has been passive, like a programmable thermostat that follows a fixed schedule. Context-aware energy management turns the home into an active participant in the energy grid. Multimodal AI gives the home the ability to “sense” its own energy needs in real time.

The agent combines several streams of information: the weather forecast (text/data), the current electricity price (API), the house’s thermal retention (IoT sensors), and occupancy status (visual/audio). If the agent “sees” that the sun is shining and the house is empty, it can open the smart blinds to warm the rooms passively. Alternatively, it can use cheap solar power to pre-cool the house before you arrive. When it detects grid stress and high prices, it automatically dims the lights and scales back the air conditioning to save energy. This AI is “high-utility” and pays for itself.
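
The fusion logic can be sketched as a few rules over the combined inputs. The sketch assumes occupancy comes from a vision/audio model, the forecast from a weather feed, and the price from a utility API. The thresholds, the heating/cooling mode flag, and the action names are hypothetical.

```python
def plan_actions(sunny, occupied, mode, price_per_kwh, grid_stress, high_price=0.30):
    """Return device actions for one decision cycle. `mode` is "heating" or "cooling"."""
    actions = []
    if sunny and not occupied:
        if mode == "heating":
            actions.append("open_blinds")        # passive solar gain while nobody is home
        elif mode == "cooling":
            actions.append("precool_on_solar")   # run AC on cheap solar before arrival
    if grid_stress or price_per_kwh >= high_price:
        actions.append("dim_lights")
        actions.append("reduce_hvac_load")       # shed load during peak pricing
    return actions


print(plan_actions(sunny=True, occupied=False, mode="heating",
                   price_per_kwh=0.12, grid_stress=False))
```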

This ability is essential for the “Electrification of Everything.” Adding EV chargers and heat pumps to homes makes the electrical load more complex, and a multimodal agent manages this load dynamically. It makes sure the car charges only when rates are low or when the rooftop solar panels produce more energy than the house needs. This approach aligns with IDC’s vision of resilient cities, in which smart homes become part of a decentralized, resilient grid. By optimizing energy use at the edge, we reduce stress on national infrastructure and lower the carbon footprint of everyday life.
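
A greedy version of that scheduling logic works like this: hours with surplus rooftop solar are used first, then the cheapest grid hours, until the car’s energy need is covered. The hourly prices, solar forecasts, and 7 kW charger rating are hypothetical inputs. A real scheduler would also respect the departure time, partial-hour charging, and how much of each hour the solar surplus actually covers.

```python
import math


def schedule_ev_charging(hourly_price, solar_surplus_kwh, energy_needed_kwh, charger_kw=7.0):
    """Return the sorted list of hour indices in which to charge.
    hourly_price and solar_surplus_kwh are per-hour forecasts of equal length."""
    hours = range(len(hourly_price))
    # Solar-surplus hours sort first (False < True), then by grid price.
    ranked = sorted(hours, key=lambda h: (solar_surplus_kwh[h] <= 0, hourly_price[h]))
    hours_needed = math.ceil(energy_needed_kwh / charger_kw)
    return sorted(ranked[:hours_needed])


print(schedule_ev_charging([0.10, 0.30, 0.05, 0.40], [0, 0, 0, 2.0], energy_needed_kwh=14))
```

Hour 3 is chosen despite its high grid price because rooftop solar is in surplus then. Hour 2 is added as the cheapest remaining grid hour.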

Travel Tech: The “Generative” Concierge

The “Generative Concierge” is the travel industry’s next step beyond the “booking engine.” Travelers no longer just want to find flights. They want a partner that understands their aspirations and their travel pain points. McKinsey’s report “Remapping Travel with Agentic AI” describes this as the shift from “adviser” (GenAI) to “direct report” (Agentic AI). A “Generative Concierge” doesn’t just give you a list of options; it “orchestrates” the whole trip.

iSeatz identifies “orchestration” as the key to making this work: the agent has to connect inspiration to action. This level of customization, built by combining many data sources, creates a “stickiness” that traditional online travel agencies (OTAs) can’t match.

Crucially, this system is built on trust. Bain & Company reports that only 10% of consumers currently trust AI to complete high-value transactions. To bridge this gap, agents should be designed with “explainable logic.” When the Concierge recommends a hotel, it shows why: “I chose this hotel because it matches the visual style of your uploaded photo and is within walking distance of the jazz club you liked.” Engaging users in a collaborative, transparent dialogue builds the confidence travelers need to let the AI handle the credit card. That is how we help travel brands turn “Lookers” into “Bookers.”

Visual Search for Hotel Discovery

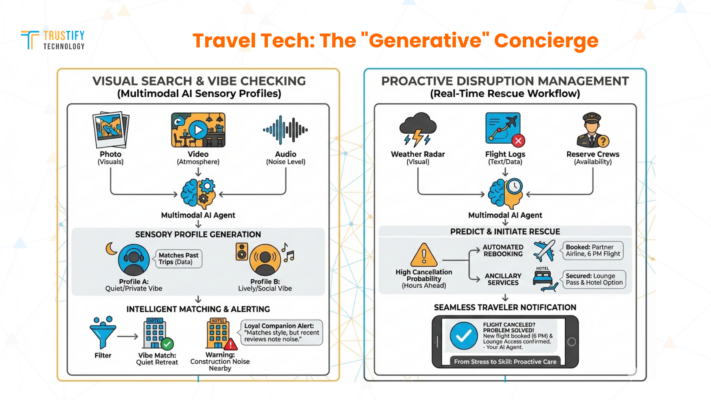

Hotel discovery is often about finding a specific “vibe,” a subjective quality that is hard to measure. Is this neighborhood “hipster” or “sketchy”? Is the hotel “lively” or “loud”? Visual Search with Multimodal AI can decode these vibes. Multimodal models can automate workflows by processing unstructured data, and here the workflow is “Vibe Checking.” The agent analyzes user-generated content to build a “sensory profile” of the hotel’s location. This includes photos of street art, videos of local cafes, and audio clips of the noise level.

When someone searches for a hotel, the agent matches them not only on amenities but also on this sensory profile. A user’s past trips (text/data) may show that they prefer quiet, private places. In that case, the agent filters out hotels whose visual analysis shows busy street fronts or whose audio data suggests noise at night. Accenture’s “Life Trends” report says AI is becoming a “loyal” companion, and a loyal companion protects you from unpleasant surprises. The agent builds a lot of trust by warning the user: “This hotel matches your style, but recent reviews mention construction noise next door.”
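
The filter-and-warn behavior can be sketched in Python. The sketch assumes the sensory profile has already been computed: a style-match score from visual analysis, an estimated night-time noise level from audio clips, and flags mined from recent reviews. The `Hotel` fields, the 45 dB limit, and the sample hotels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Hotel:
    name: str
    style_match: float        # 0..1 similarity to the user's visual preferences
    night_noise_db: float     # estimated from audio clips near the property
    busy_street_front: bool   # from visual analysis of photos/video
    review_flags: list = field(default_factory=list)  # e.g. ["construction noise next door"]


def recommend(hotels, prefers_quiet, max_night_db=45.0):
    """Filter on the sensory profile, rank by style match, and explain each pick."""
    results = []
    for h in hotels:
        if prefers_quiet and (h.busy_street_front or h.night_noise_db > max_night_db):
            continue  # excluded on sensory grounds
        note = f"{h.name} matches your style"
        if h.review_flags:
            note += f", but recent reviews mention {', '.join(h.review_flags)}"
        results.append((h.style_match, note + "."))
    return [note for _, note in sorted(results, reverse=True)]


hotels = [
    Hotel("Casa Calma", 0.90, 38, False, ["construction noise next door"]),
    Hotel("Club Central", 0.95, 62, True),
    Hotel("Jardin Inn", 0.70, 40, False),
]
for line in recommend(hotels, prefers_quiet=True):
    print(line)
```

The best pure style match, “Club Central,” is dropped for a quiet-seeking user. The remaining pick carries its caveat with it, which is what makes the recommendation explainable.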

Real-Time Disruption Management

Travel disruptions can spoil an entire trip. Traditional disruption management is reactive: the flight is canceled, the traveler gets a text, and then the scramble starts. “Real-Time Disruption Management” with multimodal AI is proactive.

For instance, the agent may see a line of thunderstorms moving toward a hub airport and a shortage of reserve crews. From these signals, it can infer a high likelihood of cancellation hours in advance and immediately start the “Rescue Workflow.” It searches for alternative flights on partner airlines and checks hotel availability for passengers who may be stranded. It even negotiates the rebooking fee in advance. iSeatz says orchestration is crucial here because the agent must connect the GDS (Global Distribution System), the hotel switch, and the airline’s operations system.
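
The early-warning step can be illustrated with a toy logistic score. The score turns storm and crew signals into a cancellation probability, and a threshold on that probability triggers the rescue workflow. The coefficients are invented for illustration rather than fitted to data. The step names are hypothetical placeholders for calls into the GDS, hotel, and airline systems.

```python
import math


def cancellation_probability(storm_prob, reserve_crews, crews_needed, hub_delay_min):
    """Toy logistic model: coefficients are illustrative, not fitted to real data."""
    crew_shortfall = max(0, crews_needed - reserve_crews)
    z = -4.0 + 3.0 * storm_prob + 0.8 * crew_shortfall + 0.01 * hub_delay_min
    return 1 / (1 + math.exp(-z))


def maybe_start_rescue(p_cancel, threshold=0.6):
    """Return the rescue steps to launch, or an empty list if risk is below threshold."""
    if p_cancel < threshold:
        return []
    return ["search_partner_flights", "check_hotel_availability", "arrange_rebooking_fee"]


p = cancellation_probability(storm_prob=0.9, reserve_crews=1, crews_needed=4, hub_delay_min=90)
print(round(p, 2), maybe_start_rescue(p))
```

Combining a likely storm with a three-crew shortfall pushes the estimate well above the threshold, so the rescue steps start before any cancellation is announced.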

When the cancellation is finally announced, the traveler receives a push notification: “Your flight has been canceled, but don’t worry. I’ve already booked you a seat on the 6 PM flight and got you a lounge pass while you wait.” A stressful situation becomes a display of competence. The AI’s full sensory awareness helps the travel provider protect the traveler from the unpredictability of the real world.